DISCOVER and NETWORK

Program

Timetable

08:00 – 09:00 AM Registration and Coffee/Pastries

09:00 – 09:15 AM Introduction

09:15 – 10:55 AM Session #1 – The Nitty-Gritty

10:55 – 11:15 AM Morning break

11:15 AM – 12:30 PM Session #2 – Learnings

12:30 – 02:00 PM Lunch

02:00 – 03:00 PM Keynote

03:00 – 03:20 PM Afternoon break

03:20 – 04:35 PM Session #3 – Artist Tools

04:35 – 04:40 PM Closing Remarks

04:40 – 05:10 PM Discussion

05:00 – 07:00 PM Reception

Program

Session #1: The Nitty-Gritty

Physically Based Lens Flare Rendering in “The Lego Movie 2”

Erik Pekkarinen (Animal Logic); Michael Balzer (Animal Logic)

We present our approach for incorporating realistic lens flare rendering into a production renderer, based on a previously presented physically based lens simulation technique [Hullin et al. 2012]. We describe the approximations and sampling techniques behind efficient lens flare rendering, and we introduce flexible artist controls and workflows for this purpose. Using “The Lego Movie 2: The Second Part” as a case study, we show that these approaches are efficient and work well in a production environment.
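
Physically based lens flare is commonly decomposed into “ghosts”: light paths that reflect twice inside the lens system before reaching the sensor. As an illustration only, and not the authors’ implementation, the sketch below enumerates the two-reflection ghosts of a lens described by a hypothetical list of refractive indices and gives each ghost a crude intensity weight from uncoated, normal-incidence Fresnel reflectance. The function names and the lens description are assumptions for this example; real systems also account for coatings, incidence angles, and the aperture.

```python
from itertools import combinations


def fresnel_normal(n1: float, n2: float) -> float:
    """Fresnel reflectance at normal incidence between media n1 and n2 (uncoated)."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def enumerate_ghosts(iors):
    """Enumerate two-reflection ghost paths through a lens system (illustrative).

    `iors` lists the refractive index *after* each interface, front to back;
    air (1.0) is assumed in front of the first interface. A ghost reflects
    first at interface i (travelling backwards) and then at an earlier
    interface j < i (travelling forwards again).
    Returns (j, i, weight) tuples, where weight is the product of the two
    normal-incidence reflectances. Transmission losses, coatings and the
    aperture are ignored in this sketch.
    """
    before = [1.0] + list(iors[:-1])  # index on the incoming side of each interface
    refl = [fresnel_normal(a, b) for a, b in zip(before, iors)]
    # combinations() yields pairs (j, i) with j < i, one per ghost path
    return [(j, i, refl[j] * refl[i]) for j, i in combinations(range(len(iors)), 2)]
```

For a lens with n interfaces this yields n(n − 1)/2 ghosts, which is why production renderers typically prune or sample ghosts rather than tracing every one at full quality.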

Sharp Kelvinlets: Elastic Deformations with Cusps and Localized Falloffs

Fernando de Goes (Pixar Animation Studios); Doug L. James (Pixar Animation Studios, Stanford University)

In this work, we present an extension of the regularized Kelvinlet technique suited to non-smooth, cusp-like edits. Our approach is based on a novel multi-scale convolution scheme that layers Kelvinlet deformations into a finite but spiky solution, thus offering physically based volume sculpting with sharp falloff profiles. We also show that the Laplacian operator provides a simple and effective way to achieve elastic displacements with fast far-field decay, thereby avoiding the need for multi-scale extrapolation. Finally, we combine the multi-scale convolution and Laplacian machinery to produce Sharp Kelvinlets, a new family of analytic fundamental solutions of linear elasticity with control over both the locality and the spikiness of the brush profile. Closed-form expressions and a reference implementation are also provided.
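
As background for this abstract, the sketch below evaluates the regularized Kelvinlet displacement of de Goes and James (2017) for a single point force, u(r) = [(a − b)/r_ε I + b/r_ε³ r rᵀ + (a/2) ε²/r_ε³ I] f, with a = 1/(4πμ), b = a/(4(1 − ν)) and r_ε = √(|r|² + ε²). It also shows the bi-scale difference of two regularized Kelvinlets, which, as we understand it, is the multi-scale extrapolation that the Laplacian approach above avoids. This is not an implementation of Sharp Kelvinlets; the function names and default material parameters (mu, nu) are illustrative assumptions.

```python
import numpy as np


def regularized_kelvinlet(x, x0, f, eps, mu=1.0, nu=0.45):
    """Displacement at points x (N x 3) from a regularized Kelvinlet at x0 with force f."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    r = x - np.asarray(x0, dtype=float)           # offsets from the brush centre
    f = np.asarray(f, dtype=float)
    a = 1.0 / (4.0 * np.pi * mu)                  # a = 1 / (4 pi mu)
    b = a / (4.0 * (1.0 - nu))                    # b = a / (4 (1 - nu))
    re = np.sqrt(np.sum(r * r, axis=1) + eps * eps)  # regularized distance r_eps
    rf = r @ f                                    # r . f for each point
    iso = (a - b) / re + 0.5 * a * eps**2 / re**3  # coefficient of the I f terms
    # b / r_eps^3 * (r r^T) f  ==  b / r_eps^3 * (r . f) r
    return iso[:, None] * f + (b / re**3 * rf)[:, None] * r


def biscale_kelvinlet(x, x0, f, eps1, eps2, **kw):
    """Difference of two scales (eps1 < eps2): the 1/r far field cancels, leaving faster decay."""
    return (regularized_kelvinlet(x, x0, f, eps1, **kw)
            - regularized_kelvinlet(x, x0, f, eps2, **kw))
```

Far from the brush, the single-scale displacement decays like 1/r, whereas the bi-scale difference decays like 1/r³, so edits stay more local at the cost of combining several evaluations.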

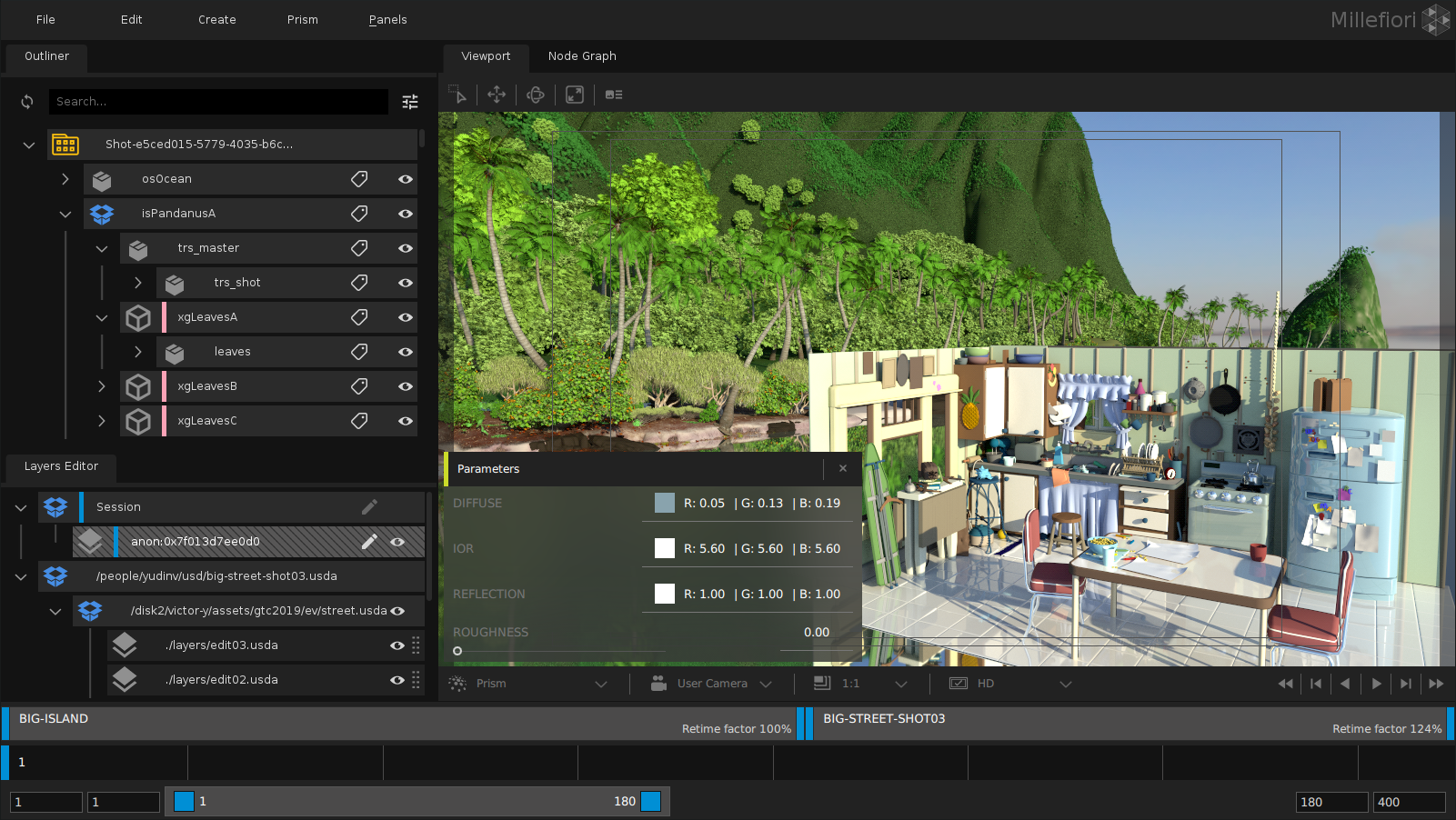

Millefiori: A USD-Based Sequence Editor

Victor Yudin (Mill Film); Gregory Ducatel (Mill Film)

Millefiori is a visual effects application designed to let users view and edit a series of large scenes, with Pixar’s Universal Scene Description (USD) at its core and Qt/QML for the UI components. Although Millefiori began as a sequence editor, its USD-based core has since become the basis of the entire pipeline at Mill Film, from editing a USD stage to generating a review QuickTime. Development of the technology was a successful collaboration among developers, led by Mill Film, MPC R&D, and Technicolor Research and Innovation.
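
At its simplest, a sequence editor has to map a frame in sequence time to a shot and a frame in that shot’s local time. The sketch below is a hypothetical illustration of that bookkeeping, not Millefiori’s data model: the `Shot` and `Sequence` classes and the shot names are invented for this example. In USD, a comparable mapping is expressed with layer offsets (an offset and scale applied to a referenced or sublayered layer’s time).

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Shot:
    name: str         # hypothetical shot identifier (e.g. a path to a shot layer)
    first_frame: int  # first frame of the cut range, in shot-local time
    duration: int     # number of frames this shot contributes to the edit


class Sequence:
    def __init__(self, shots: List[Shot]):
        self.shots = shots
        self.starts = []  # start frame of each shot in sequence time
        t = 0
        for shot in shots:
            self.starts.append(t)
            t += shot.duration
        self.length = t

    def resolve(self, frame: int) -> Tuple[str, int]:
        """Map a sequence frame to (shot name, shot-local frame)."""
        if not 0 <= frame < self.length:
            raise ValueError(f"frame {frame} is outside the sequence [0, {self.length})")
        idx = bisect_right(self.starts, frame) - 1  # last shot starting at or before frame
        shot = self.shots[idx]
        return shot.name, shot.first_frame + (frame - self.starts[idx])
```

For example, with a 24-frame shot followed by a 48-frame shot, both starting at local frame 1001, sequence frame 24 resolves to the first frame of the second shot.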

Distributed Multi-Context Interactive Rendering

Alex Gerveshi (DreamWorks Animation); Sean Looper (DreamWorks Animation)

By enabling artists to work interactively with multiple active renders from a single application, new lighting and surfacing workflows were made possible. This technique was implemented by replacing Katana’s interactive-render mechanism, and by leveraging Arras, DreamWorks’ in-house cloud computation system.
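
The abstract does not describe the mechanism in detail, so the following is a hypothetical sketch of the core idea only: one artist session fans each scene edit out to several render contexts, each of which keeps its own overrides (for example a different camera or shot). The `submit` method merely records the update where a real system would send it to a remote render process; no Katana or Arras API is used or implied, and all class names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RenderContext:
    name: str
    overrides: Dict[str, object] = field(default_factory=dict)  # per-context settings
    received: List[Dict[str, object]] = field(default_factory=list)  # updates "sent" so far

    def submit(self, scene_state: Dict[str, object]) -> None:
        # Stand-in for shipping an update to a remote renderer: merge the shared
        # scene state with this context's overrides and record the result.
        self.received.append({**scene_state, **self.overrides})


class MultiContextSession:
    def __init__(self):
        self.scene: Dict[str, object] = {}  # shared, artist-edited scene state
        self.contexts: Dict[str, RenderContext] = {}

    def add_context(self, ctx: RenderContext) -> None:
        self.contexts[ctx.name] = ctx
        ctx.submit(self.scene)  # bring the new context up to date

    def edit(self, key: str, value: object) -> None:
        # A single edit is broadcast to every active render context
        self.scene[key] = value
        for ctx in self.contexts.values():
            ctx.submit(self.scene)
```

The design point illustrated is that the artist edits one shared state while each render keeps its own view of it, so a lighting change can be judged in several shots at once.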

Session #2: Learnings

Creating Interactive VR Narratives through Experimentation and Learning

Larry Cutler (Baobab Studios); Eric Darnell (Baobab Studios); Nathaniel Dirksen (Baobab Studios); Michael Hutchinson (Baobab Studios); Robert Schiewe (Baobab Studios)

Virtual Reality (VR) is a transformative medium for narrative storytelling where content creators can place the viewers inside the story and give them a role to play. Immersive storytelling is fundamentally different from film and games. It requires a new creative toolset that is still in its infancy compared to previous mediums.

We present our studio’s approach to, and evolution in, developing interactive VR animated narratives across four immersive projects: Invasion!, Asteroids!, Crow: The Legend, and Bonfire. We provide a behind-the-scenes look at our many experiments, failures, and learnings across these four projects. We delve into the challenges of staging and direction in a 360-degree space where the viewer can be anywhere and look anywhere, and we explore the role of the viewer in our projects. Finally, we dive into our experiments in building meaningful connections with characters through interactivity.

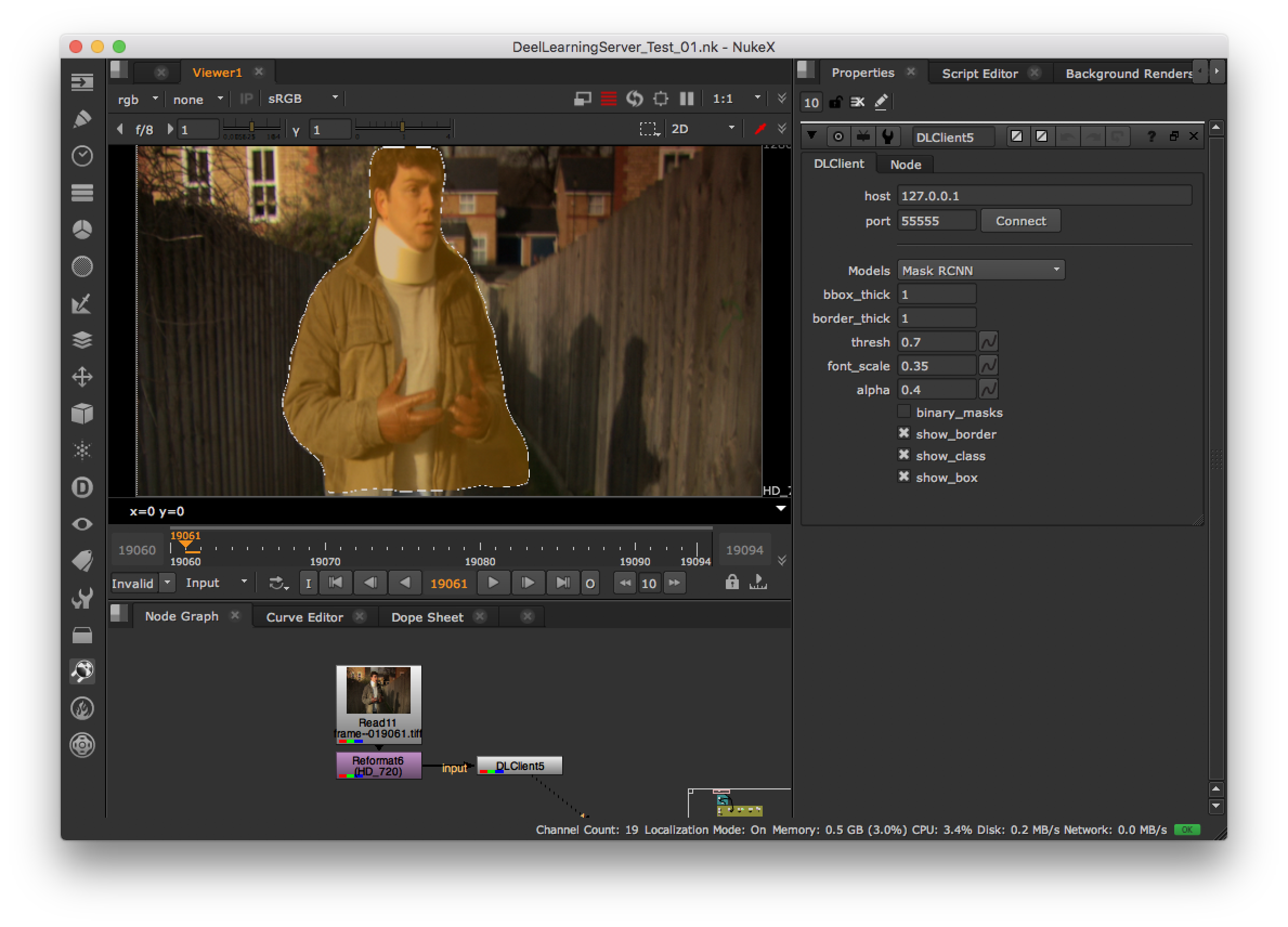

Jumping in at the Deep End: How to Experiment with Machine Learning in Post-Production Software

Dan Ring (Foundry); Johanna Barbier (Foundry); Guillaume Gales (Foundry); Ben Kent (Foundry); Sebastian Lutz (Trinity College Dublin)

Recent years have seen an explosion in Machine Learning (ML) research. The challenge now is to transfer these new algorithms into the hands of artists and TDs in visual effects and animation studios, so that they can start experimenting with ML within their existing pipelines. This paper presents some of the current challenges to experimenting with, and deploying, ML frameworks in the post-production industry. It introduces our open-source “ML-Server” client/server system as a way to enable rapid prototyping, experimentation, and development of ML models in post-production software. Data, code, and examples for the system can be found in the GitHub repository: https://github.com/TheFoundryVisionmongers/nuke-ML-server
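
A client/server split like this needs some way to frame requests and responses over a socket. The sketch below shows one generic approach, a 4-byte big-endian length prefix followed by a JSON payload; it is an assumption for illustration and not ML-Server’s actual wire format, which is defined in the repository linked above. The helper names are hypothetical.

```python
import json
import struct

HEADER = struct.Struct(">I")  # 4-byte big-endian payload length


def encode_message(obj) -> bytes:
    """Serialize obj as JSON and prefix it with its byte length."""
    payload = json.dumps(obj).encode("utf-8")
    return HEADER.pack(len(payload)) + payload


def decode_messages(buffer: bytes):
    """Split a byte buffer into complete messages.

    Returns (messages, leftover), where leftover holds the bytes of an
    incomplete trailing message to be kept until more data arrives.
    """
    messages = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        (length,) = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # message not fully received yet
        messages.append(json.loads(buffer[offset + HEADER.size:end].decode("utf-8")))
        offset = end
    return messages, buffer[offset:]
```

Length-prefixing lets the receiver handle partial reads from a stream socket; a production system would typically carry image data as raw bytes rather than JSON.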

ASWF Technical Advisory Committee: How to Enable an Open Source Community

Daniel Heckenberg (Academy Software Foundation); Jean-Francois Panisset (Academy Software Foundation); Emily Olin (Academy Software Foundation)

The Technical Advisory Committee (TAC) coordinates technical efforts in the Academy Software Foundation (ASWF). The ASWF, launched in August 2018, exists to support open source software development in the motion picture content creation industry. The TAC is formed by software engineers from the Foundation’s members and its software projects. It plays a key role in establishing and promulgating best practices, proposing and scrutinizing candidate projects, and establishing shared resources such as Continuous Integration infrastructure.

Session #3: Artist Tools

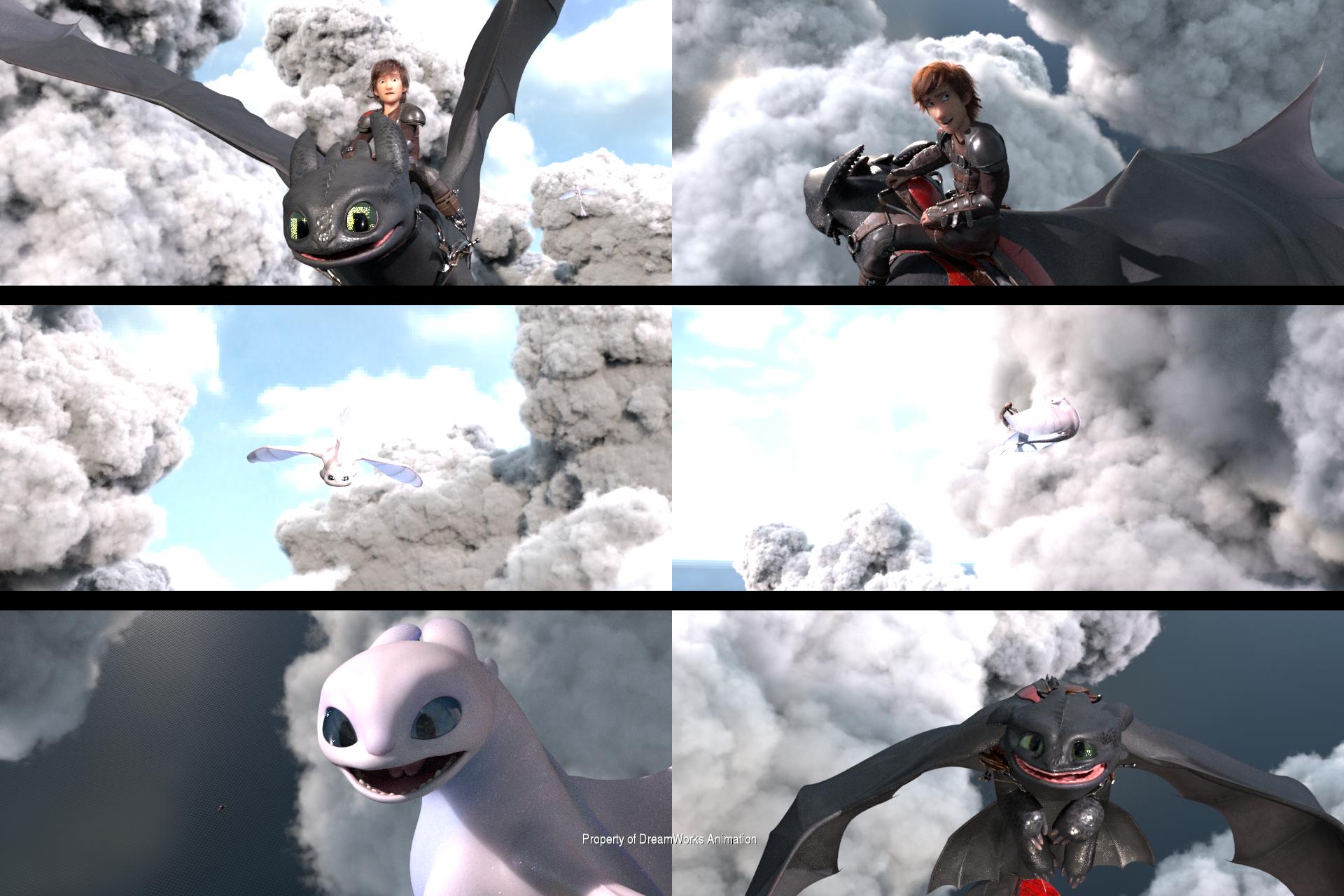

Skunk: DreamWorks Fur Motion System

Nicholas Augello (DreamWorks Animation); Arunachalam Somasundaram (DreamWorks Animation)

This talk presents DreamWorks’ fur motion system, Skunk, which is used to produce motion for fur on characters, garments, and props. Skunk’s ease of use, speed, stability, interactivity, flexible framework, layered simulation approach, on-the-fly fur setup, consistency, and artist controls pushed the boundaries of fur motion and interaction, and expanded artist usage at DreamWorks. The system was used widely on the feature film How to Train Your Dragon: The Hidden World and the short Bilby, and is being used on current feature films and shorts at DreamWorks.
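
To make the idea of a layered approach concrete, the sketch below simulates a single strand in two layers: an animated layer that pins the root to a given (for example skinned) position each frame, and a dynamic layer that applies Verlet integration with gravity, damping, and iterative segment-length constraints. This is a generic, textbook-style strand sketch under our own assumptions, not Skunk’s solver; the function name and all parameter values are illustrative.

```python
import numpy as np


def simulate_strand(root_positions, n_points=5, seg_len=1.0,
                    gravity=(0.0, -9.8, 0.0), dt=1.0 / 24.0,
                    damping=0.02, iterations=10):
    """Return per-frame point positions of one strand following animated roots."""
    roots = np.asarray(root_positions, dtype=float)
    # Rest pose: strand hangs straight down from the first root position
    pos = roots[0] + np.array([[0.0, -seg_len * k, 0.0] for k in range(n_points)])
    prev = pos.copy()
    g = np.asarray(gravity, dtype=float)
    frames = []
    for root in roots:
        # Dynamic layer: damped Verlet step under gravity
        vel = (pos - prev) * (1.0 - damping)
        prev = pos.copy()
        pos = pos + vel + g * dt * dt
        # Constraint iterations; the root is re-pinned to the animated layer each pass
        for _ in range(iterations):
            pos[0] = root
            for k in range(n_points - 1):
                d = pos[k + 1] - pos[k]
                dist = np.linalg.norm(d)
                if dist < 1e-12:
                    continue
                corr = (dist - seg_len) / dist * d  # correction restoring segment length
                if k == 0:
                    pos[k + 1] -= corr          # root is fixed: move only the child
                else:
                    pos[k] += 0.5 * corr        # otherwise split the correction
                    pos[k + 1] -= 0.5 * corr
        pos[0] = root
        frames.append(pos.copy())
    return frames
```

Keeping the animated root motion and the simulated secondary motion in separate layers is what lets an artist adjust one without redoing the other.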

Scriptable Character FX Solution

Cameron Black (Walt Disney Animation Studios); Nicholas Burkard (Walt Disney Animation Studios); Dmitriy Pinskiy (Walt Disney Animation Studios)

We present a scriptable, interactive data manipulation tool that was heavily used on Disney’s Moana and Ralph Breaks the Internet. Its expression-driven interface makes it a versatile “Swiss Army Knife” for Technical Animation: a single tool with many functions that can be applied to hair, cloth, and final cleanup tasks. This benefits both developers and end users. The single code base can be maintained and upgraded efficiently, and artists who are familiar with the tool in the context of one task can take full advantage of its flexible interface and easily apply it to another.
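
The value of an expression-driven interface is that one engine serves many tasks: only the expression changes. The sketch below is a hypothetical illustration of that idea, not Disney’s tool. It evaluates a user expression per point with a few predefined variables (`P` for the point, `i` for its index, `n` for the count, `t` for the normalized index) and a small set of math functions. Note that Python’s `eval` with restricted builtins is not a security sandbox; a production tool would use its own expression language.

```python
import math

# Functions exposed to expressions (an illustrative, deliberately small set)
SAFE_NAMES = {name: getattr(math, name) for name in ("sin", "cos", "sqrt", "exp", "pi")}
SAFE_NAMES.update(min=min, max=max, abs=abs)


def apply_expression(points, expression):
    """Evaluate `expression` once per point and return the new points.

    The expression must produce a 3-tuple; it can read P, i, n and t.
    """
    code = compile(expression, "<expr>", "eval")
    n = len(points)
    out = []
    for i, p in enumerate(points):
        env = {"__builtins__": {}, **SAFE_NAMES,
               "P": p, "i": i, "n": n, "t": i / max(n - 1, 1)}
        result = eval(code, env)
        out.append(tuple(float(c) for c in result))
    return out
```

The same function can, for example, flatten a curve with `"(P[0], P[1] * 0.5, P[2])"` or ramp an offset along a strand with `"(P[0], P[1] + t, P[2])"`.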

SpLit: Interactive light dialing as creative vehicle

Orde Stevanoski (Sony Pictures Imageworks); Larry Gritz (Sony Pictures Imageworks)

In this talk, we present SpLit, a lighting manipulation tool created at Sony Pictures Imageworks, designed to encourage experimentation and creativity when creating and manipulating CG lighting through a novel, artist-friendly visual interface. We found that artists are often discouraged from experimenting and making sweeping lighting changes by the complexity of the currently available user interfaces for CG lighting.

Manipulating the lights, rendering, and evaluating the decisions form a cyclical process that requires multiple clicks through various UI elements, and rendering takes time to resolve to the point where the results of the changes can be evaluated.

With SpLit, we focused on tightening the manipulation and decision-making loop and bringing it into real time to encourage experimentation and creative freedom.
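
One well-known way to make light dialing real time is to exploit the linearity of light transport: render each light (or light group) once into its own image, then rebuild the beauty image as a weighted sum whenever the artist changes a gain or tint. Whether SpLit works this way is not stated above, so the sketch below is an assumption that illustrates the general technique only; the function name and light names are hypothetical.

```python
import numpy as np


def recombine(light_aovs, gains, tints=None):
    """Rebuild a beauty image as sum_k gain_k * tint_k * AOV_k.

    light_aovs: dict of light name -> H x W x 3 array, one render per light.
    gains: dict of light name -> scalar multiplier (missing lights default to 1.0).
    tints: optional dict of light name -> RGB multiplier (default white).
    """
    tints = tints or {}
    result = None
    for name, aov in light_aovs.items():
        gain = gains.get(name, 1.0)
        tint = np.asarray(tints.get(name, (1.0, 1.0, 1.0)), dtype=float)
        # The tint broadcasts over the last (RGB) axis of the image
        contribution = gain * np.asarray(aov, dtype=float) * tint
        result = contribution if result is None else result + contribution
    return result
```

Because recombination is only a few multiply-adds per pixel, gain and color changes can be previewed instantly, while changes to light position or shape still require re-rendering.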